Overview

We present True Sense, a system that pairs a software plugin with a pair of gloves to support 3D painting and modeling applications in Mixed Reality, such as HoloStudio. True Sense can recognize the materials of physical objects and embed holographic content that presents information about them. By integrating True Sense into HoloStudio, we aim to add haptic feedback for texturing virtual objects and to promote exploration and material learning in the real world.

True Sense Components

Plugin

Once installed in the application, the plugin provides a local material library and access to a cloud material database. The local material library stores a set of fundamental materials along with their texture data and the specific vibration patterns for the gloves. The plugin is designed to grow a customized material library based on individual preferences and usage frequency. Users can update this library with new material data that they discover in daily life.

The Cloud Material Database

The Cloud Material Database is built on the Google Cloud Vision API (GCV) and our own image catalog, which is organized by the materials of physical objects. We collect images of a wide range of objects and classify them by material. The database includes specific categories such as glass, iron, aluminium, and steel, and we use it to identify the materials of diverse objects. When the local material library cannot find the material of a captured object, the plugin queries the Cloud Material Database service for a match. The GCV-based Cloud Material Database collects material data from existing online sources as well as users’ uploads, and uses this data to train a deep neural network. The database can also store users' materials when the local library reaches capacity.
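The interplay between the local library and the cloud database can be sketched as follows. This is a minimal illustration, not the True Sense implementation: the class name `LocalMaterialLibrary`, the fixed `capacity`, the plain dictionary standing in for the cloud database, and the "move the least used material to the cloud" policy are all assumptions made for the example.

```python
from collections import Counter


class LocalMaterialLibrary:
    """Hypothetical local library that keeps the most frequently used materials."""

    def __init__(self, capacity, cloud_store):
        self.capacity = capacity
        self.cloud_store = cloud_store  # dict standing in for the cloud database (assumption)
        self.materials = {}             # material name -> material data
        self.use_count = Counter()      # material name -> number of lookups

    def add(self, name, data):
        """Store a material locally; if full, move the least used one to the cloud."""
        if name not in self.materials and len(self.materials) >= self.capacity:
            least_used = min(self.materials, key=lambda m: self.use_count[m])
            self.cloud_store[least_used] = self.materials.pop(least_used)
        self.materials[name] = data

    def lookup(self, name):
        """Check the local library first, then fall back to the cloud store."""
        if name not in self.materials:
            if name not in self.cloud_store:
                return None
            self.add(name, self.cloud_store[name])
        self.use_count[name] += 1
        return self.materials[name]
```

With a capacity of two, looking up a third material that only exists in the cloud store pulls it into the local library and pushes the least used local material out to the cloud.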

Gloves

Each True Sense Glove has three linear, coin-shaped actuators placed on the back of each finger, forming an array of 14 actuators in total (Figure 1). The actuators are small, and each is sized to match the surface area of the region of the finger it covers. For example, when the user flexes the index finger, the user feels vibration from the three actuators on the index finger of the glove, simulating the haptic sensation of touching the physical object. The actuators apply lateral force to display 2.5-dimensional geometric shapes, which represent the texture of a material in the plugin's material library. By combining the gloves with this haptic illusion, the True Sense system presents virtual bump illusions.

Figure 1. (Credit to Samarth.)

Figure 2. (Credit to Samarth.)

Feature 1

Enabling Users to Sense Virtual Content

Users can sense and manipulate virtual content within the HoloStudio application. This interaction is augmented by real-time haptic feedback from the True Sense Gloves when the user selects a specific object to interact with.

How It Works

Object Selection

When the user wears the True Sense Gloves to select or pick up a virtual object in HoloStudio, HoloLens uses its motion cameras to track the movement of the gloves as well as the user's gestures (Figures 3 and 4).

Figure 3. Users click the button “TS” to open this plugin

Figure 4.

Object Recognition

The tracking information is then sent to HoloStudio, which processes it and maps the position of the gloves onto the locations of the virtual objects. In this way, HoloStudio identifies the specific virtual object that the user is selecting. HoloStudio then confirms the successful selection through a pop-up notification on the user interface (Figure 5).
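One simple way to map a tracked glove position onto a virtual object is a bounding-box containment test. The sketch below assumes each virtual object is approximated by an axis-aligned box in the same coordinate space as the glove position; the `VirtualObject` type and `select_object` function are hypothetical names for illustration and are not part of HoloStudio.

```python
from dataclasses import dataclass


@dataclass
class VirtualObject:
    """Hypothetical virtual object approximated by an axis-aligned bounding box."""
    name: str
    min_corner: tuple  # (x, y, z) of the lowest corner
    max_corner: tuple  # (x, y, z) of the highest corner

    def contains(self, point):
        # The point is inside when every coordinate lies within the box bounds.
        return all(lo <= p <= hi
                   for lo, p, hi in zip(self.min_corner, point, self.max_corner))


def select_object(glove_position, objects):
    """Return the first object whose box contains the glove position, else None."""
    for obj in objects:
        if obj.contains(glove_position):
            return obj
    return None
```

A real scene would likely need a finer test against the object mesh, but the box test is enough to show how a position resolves to a selected object.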

Figure 5.

Haptic Feedback

At the same time, HoloStudio sends the object data of the selected virtual object to the installed True Sense Plugin. The object data stores the depth information of a material, such as the distance between two bumps and the size of the bumps in a grained wood material. The plugin retrieves this data and looks up the corresponding texture data, which maps the depth information to a vibration frequency. The texture data is then translated into a vibrotactile pattern, which drives the actuators on the gloves to vibrate at a specific frequency. In this way, the gloves simulate the texture of the selected object.
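The mapping from depth information to vibration could take many forms. The sketch below assumes one plausible rule: a hand sliding across evenly spaced bumps crosses one bump every `spacing / speed` seconds, so the vibration frequency is the hand speed divided by the bump spacing, and the amplitude scales with bump height. The function name, the hand-speed input, and the `max_height_mm` normalization are assumptions for illustration, not details of the True Sense design.

```python
def vibrotactile_pattern(bump_spacing_mm, bump_height_mm, hand_speed_mm_s,
                         max_height_mm=2.0):
    """Translate texture depth data into a frequency and a normalized amplitude.

    Assumed rule: frequency = hand speed / bump spacing; amplitude is the bump
    height relative to max_height_mm, capped at 1.0.
    """
    if bump_spacing_mm <= 0:
        raise ValueError("bump spacing must be positive")
    frequency_hz = hand_speed_mm_s / bump_spacing_mm
    amplitude = min(bump_height_mm / max_height_mm, 1.0)
    return {"frequency_hz": frequency_hz, "amplitude": amplitude}
```

For example, bumps spaced 2 mm apart and 0.5 mm high, explored at 100 mm/s, give a 50 Hz vibration at a quarter of the maximum amplitude under these assumptions.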

User Journey And System Flow

(Figure 6)

Figure 6.

Pseudocode - HoloStudio

Import library for HoloLens
Import library for True Sense Plugin
Import library for True Sense Gloves

Set motion data from HoloLens camera = input
Set still photo = input
Set identifier = object data
Set vibrotactile pattern data = output

Setup
    Turn on the camera for tracking gloves
    Connect the True Sense Gloves

Draw
    Read motion data from gloves
    if motion data stays on one object
        Take a still photo of the object
        Recognize the object from the photo
        if the object is identified in the local material library from plugin
            Call out the identifier of the object
            Write identifier to local material library from plugin
            Create outline to highlight the object
        else
            keep trying to identify the object
            Print “Please try again.”
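The condition "motion data stays on one object" can be made concrete as a dwell check. The sketch below assumes the tracker produces time-ordered samples of which object the glove is over, and that a selection fires once the same object has been hovered for a threshold duration; the function name and the one-second default are hypothetical choices for the example.

```python
def detect_dwell(samples, dwell_seconds=1.0):
    """Return the first object hovered continuously for dwell_seconds, else None.

    samples: iterable of (timestamp_seconds, object_id or None), in time order.
    """
    current = None  # object currently under the glove
    start = None    # time at which the glove arrived on that object
    for timestamp, object_id in samples:
        if object_id != current:
            # The glove moved to a different object (or to empty space): restart.
            current, start = object_id, timestamp
        elif current is not None and timestamp - start >= dwell_seconds:
            return current
    return None
```

Resetting the timer whenever the hovered object changes keeps brief passes over other objects from triggering a selection.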

Pseudocode - True Sense Plugin

Import library for HoloLens
Import library for HoloStudio
Import library for True Sense Gloves

Set identifier = object data

Setup
    Connect the True Sense Gloves

Draw
    Read the identifier from HoloStudio
    Use identifier to search the local material library
    if the identifier matches the stored material data
        Call out the corresponding vibrotactile pattern data for that identifier
        Write the vibrotactile pattern data to Gloves

Pseudocode - True Sense Gloves

Import library for HoloLens
Import library for HoloStudio
Import library for True Sense Plugin

Set vibrotactile pattern data = input
Set vibration = output

Setup
    Connect the HoloStudio

Draw
    Read the vibrotactile pattern data
    Write the vibration

Feature 2

Enabling Users to Capture and Recognize Materials from Physical Objects

True Sense encourages designers and artists to explore, discover, and learn about the variety of materials from which physical objects can be made. Using the depth-sensing capabilities of Microsoft HoloLens, True Sense can recognize different objects and embed holographic content that identifies the corresponding materials.

How It Works

Object Selection

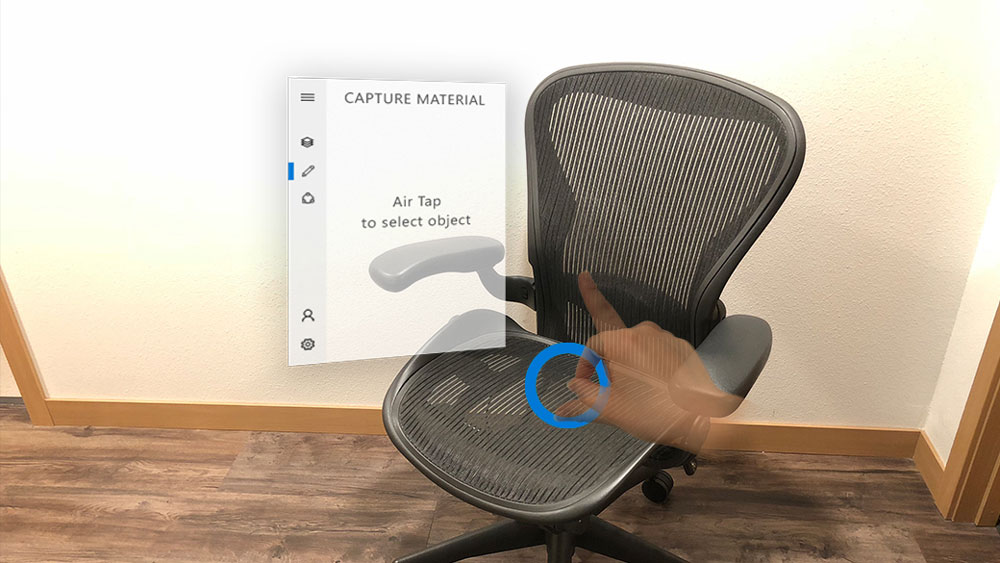

The user performs an air tap gesture to select a physical object within range of the HoloLens tracking cameras. HoloLens recognizes the hand gesture by tracking the portion of either or both hands that is visible to the device. It uses the gesture-sensing camera to determine whether the user’s hand movement falls within or outside the gesture frame (Figures 7 and 8).

Figure 7.

Figure 8.

Material Recognition

After the user presses a finger down to tap and select the target object, the front-facing camera on the HoloLens captures an image of the object. During recognition, HoloStudio shows a selection indicator on the user interface to provide feedback to the user. Recognition through the True Sense local material library takes 3-5 s, while recognition through the cloud database takes 5-10 s, depending on network speed.

Material Information Display

After the system recognizes the material of the object, the HoloLens connects to a remote server hosted on the Amazon Web Services platform to obtain dynamically linked content from multiple sources and display it on the physical object. The HoloLens then uses its aggregated depth-sensing data to generate environment meshes, so that users see the name and description of the material embedded directly on top of the identified object. Although GCV returns text in English, nouns can be translated effectively by incorporating services such as Google Translate to target multiple languages. Designers and artists can also learn how physical objects are made from various materials through short video clips displayed in True Sense. Embedding video clips in Mixed Reality that demonstrate how a material is used would promote learning about materials in the wild (Figure 9).

Figure 9.

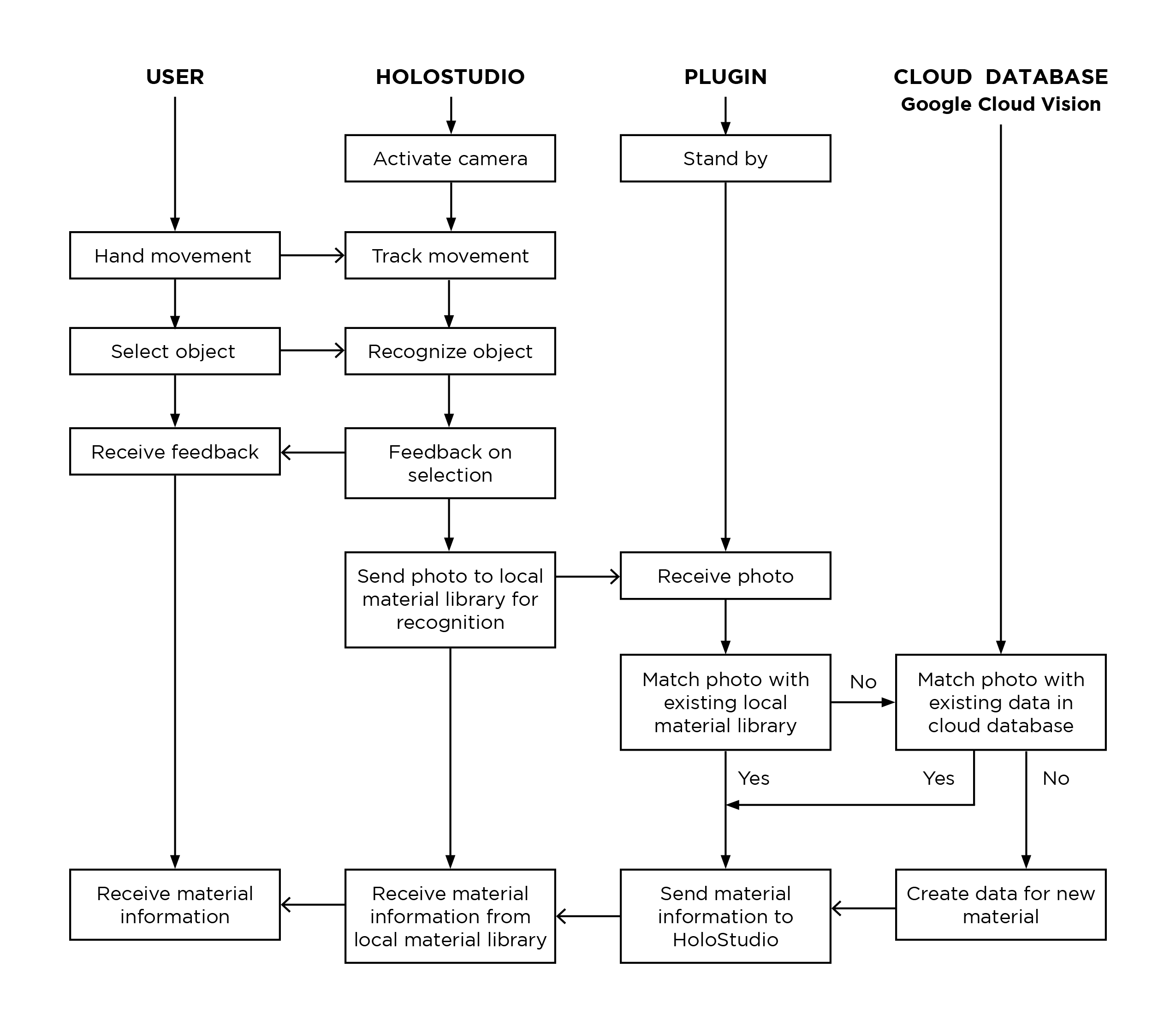

User Journey And System Flow

(Figure 10)

Figure 10.

Pseudocode - HoloStudio

Import library for HoloLens
Import library for True Sense Plugin
Import Google Cloud Vision API

Set motion data from HoloLens camera = input
Set still photo = input
Set object data = input
Set description = input

Setup
    Turn on the camera for tracking gloves

Draw
    Read motion data from hand
    if motion data stays on one object
        Create outline to highlight the object
        Take a still photo of the object
        Recognize the object from the photo
        Send the photo to Plugin
    if the data is received from plugin
        Read object data
        Print object material and related information on screen
    if a request for a description is received from plugin
        Print “Please describe the object and its material/attributes”

Pseudocode - True Sense Plugin

Import library for HoloLens
Import library for HoloStudio
Import Google Cloud Vision API

Set still photo = input
Set object data = input
Set description = input

Setup
    Connect the True Sense Gloves

Draw
    Read the still photo from HoloStudio
    Search the local material library
    if the object is identified
        Write the object material and related information to HoloStudio
    else
        Connect to Google Cloud Vision Service
        Use the still photo to initialize the search
        if the object is identified
            Write the object material and related information to HoloStudio
        else
            Write request to HoloStudio for more information about this unidentifiable object
            if description is enough to create a data set for the new material
                Create a data set for the new material
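The fallback order in the plugin pseudocode (local library, then cloud service, then a request to the user) can be sketched as a small function. The two lookups are passed in as plain callables so that the example makes no claims about the Google Cloud Vision API; `recognize_material`, `local_lookup`, and `cloud_lookup` are hypothetical names, and each lookup is assumed to return a material name or `None`.

```python
DESCRIPTION_REQUEST = "Please describe the object and its material/attributes"


def recognize_material(photo, local_lookup, cloud_lookup):
    """Try the local library, then the cloud service, then ask the user.

    local_lookup and cloud_lookup are stand-in callables (assumptions) that
    take a photo and return a material name, or None when nothing matches.
    """
    material = local_lookup(photo)
    if material is not None:
        return {"source": "local", "material": material}
    material = cloud_lookup(photo)
    if material is not None:
        return {"source": "cloud", "material": material}
    # Neither lookup matched: ask HoloStudio to prompt the user for a description.
    return {"source": None, "material": None, "request": DESCRIPTION_REQUEST}
```

Trying the local library first matches the timing described earlier, where local recognition is faster than a round trip to the cloud database.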

Limitations & Future Work

In the future, we would like to conduct a series of user studies to measure the effectiveness of True Sense and answer the following research questions: Do users feel curious and safe when discovering and recognizing the materials of physical objects in their lives? How engaged are designers and artists within mixed reality scenarios? Does haptic feedback improve speed and accuracy when users create 3D objects in HoloStudio? We are also considering how to improve human-to-human interaction through social and collaborative features. For example, users could share or exchange their individual material libraries in Mixed Reality, or invite other people to explore the materials of physical objects in the real world. In addition, we would like to integrate more physical objects into the virtual world, not only for discovering materials but also for controlling virtual tools and objects in HoloStudio. Finally, we want to create a more immersive and intuitive experience by delivering tactile sensations in free air, without requiring users to wear a physical device such as gloves. We believe that improvements in haptic technologies and head-mounted devices will lead to more immersive and comfortable user experiences.

Appendix

- Cédric Kervegant, Félix Raymond, Delphine Graeff, and Julien Castet. 2017. Touch hologram in mid-air. In ACM SIGGRAPH 2017 Emerging Technologies (SIGGRAPH '17). ACM, New York, NY, USA, Article 23, 2 pages.
  DOI: https://doi-org.offcampus.lib.washington.edu/10.1145/3084822.3084824

- Christian David Vazquez, Afika Ayanda Nyati, Alexander Luh, Megan Fu, Takako Aikawa, and Pattie Maes. 2017. Serendipitous Language Learning in Mixed Reality. In Proceedings of the 2017 CHI Conference Extended Abstracts on Human Factors in Computing Systems (CHI EA '17). ACM, New York, NY, USA, 2172-2179.
  DOI: https://doi-org.offcampus.lib.washington.edu/10.1145/3027063.3053098

- Dimitrios Tzovaras, Konstantinos Moustakas, Georgios Nikolakis, and Michael G. Strintzis. 2009. Interactive mixed reality white cane simulation for the training of the blind and the visually impaired. Personal Ubiquitous Comput. 13, 1 (January 2009), 51-58.
  DOI: http://dx.doi.org.offcampus.lib.washington.edu/10.1007/s00779-007-0171-2

- Judith Amores, Xavier Benavides, and Lior Shapira. 2016. TactileVR: Integrating Physical Toys into Learn and Play Virtual Reality Experiences. In Proceedings of the 2016 CHI Conference Extended Abstracts on Human Factors in Computing Systems (CHI EA '16). ACM, New York, NY, USA, 1-1.
  DOI: https://doi.org/10.1145/2851581.2889438

- Misha Sra, Pattie Maes, Prashanth Vijayaraghavan, and Deb Roy. 2017. Auris: creating affective virtual spaces from music. In Proceedings of the 23rd ACM Symposium on Virtual Reality Software and Technology (VRST '17). ACM, New York, NY, USA, Article 26, 11 pages.
  DOI: https://doi-org.offcampus.lib.washington.edu/10.1145/3139131.3139139

- Oscar Rosello, Marc Exposito, and Pattie Maes. 2016. NeverMind: Using Augmented Reality for Memorization. In Proceedings of the 29th Annual Symposium on User Interface Software and Technology (UIST '16 Adjunct). ACM, New York, NY, USA, 215-216.
  DOI: https://doi-org.offcampus.lib.washington.edu/10.1145/2984751.2984776

- Samarth Singhal, Carman Neustaedter, Alissa N. Antle, and Brendan Matkin. 2017. Flex-N-Feel: Emotive Gloves for Physical Touch Over Distance. In Companion of the 2017 ACM Conference on Computer Supported Cooperative Work and Social Computing (CSCW '17 Companion). ACM, New York, NY, USA, 37-40.
  DOI: https://doi-org.offcampus.lib.washington.edu/10.1145/3022198.3023273

- Satoshi Saga. 2015. Lateral-force-based haptic display. In SIGGRAPH Asia 2015 Haptic Media And Contents Design (SA '15). ACM, New York, NY, USA, Article 10, 3 pages.
  DOI: http://dx.doi.org.offcampus.lib.washington.edu/10.1145/2818384.2818391

- Takafumi Aoki, Hironori Mitake, Shoichi Hasegawa, and Makoto Sato. 2009. Haptic ring: touching virtual creatures in mixed reality environments. In SIGGRAPH '09: Posters (SIGGRAPH '09). ACM, New York, NY, USA, Article 100, 1 page.
  DOI: http://dx.doi.org.offcampus.lib.washington.edu/10.1145/1599301.1599401

- Tom Carter, Sue Ann Seah, Benjamin Long, Bruce Drinkwater, and Sriram Subramanian. 2013. UltraHaptics: multi-point mid-air haptic feedback for touch surfaces. In Proceedings of the 26th annual ACM symposium on User interface software and technology (UIST '13). ACM, New York, NY, USA, 505-514.
  DOI: https://doi-org.offcampus.lib.washington.edu/10.1145/2501988.2502018